In summary

- Google Maps getting Gemini

- Find places along your route

- Complex conversational abilities

- Most new features US-only

Google Maps is receiving a massive free upgrade that will employ Gemini AI to improve navigation – but not everyone will be getting it.

Google chief Sundar Pichai took to X recently to reveal that “Gemini has arrived as your hands-free driving assistant” in the Google Maps app.

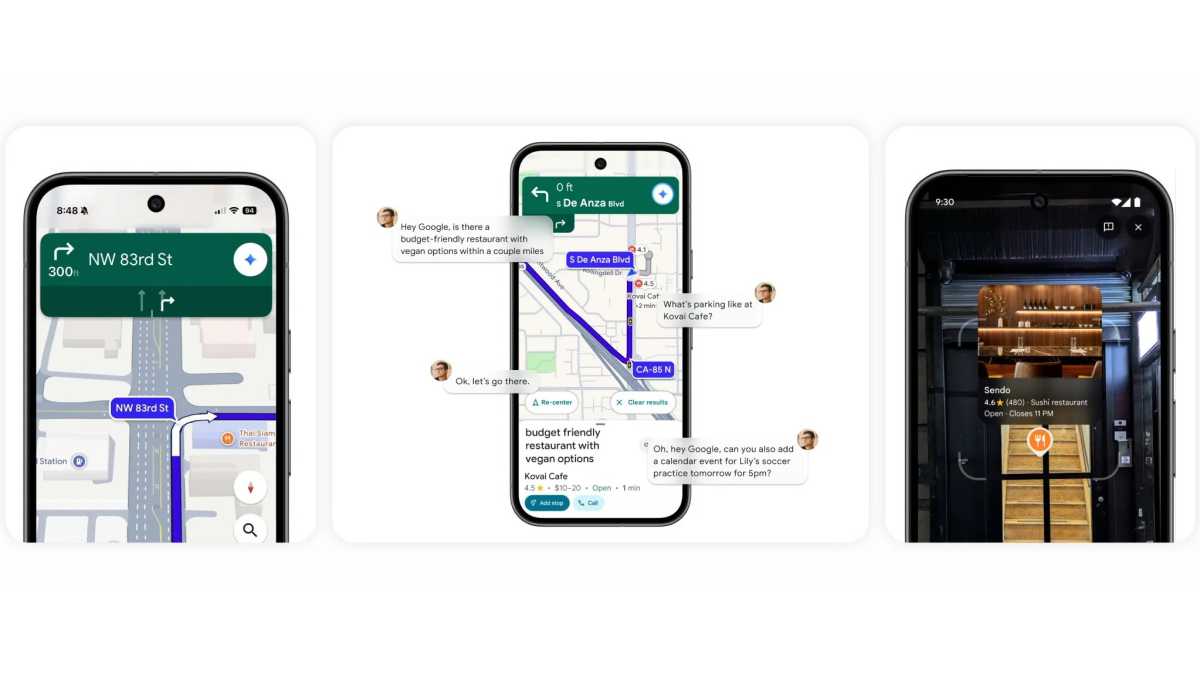

Google’s AI assistant will be able to find places along your route, check for EV charging availability, and share your expected arrival time, all when you ask in natural language.

As Pichai points out, it’ll be able to parse complex requests like “find me a restaurant that serves vegetarian tacos within a couple of miles that has good parking.”

The new Google Maps with Gemini will be able to supply landmarks to help make navigation even easier, rather than just relying on distances.

Google Maps is getting smarter

Over on the Google blog, director of products Amanda Leicht Moore has shared more.

Because Gemini can work across apps, you’ll be able to add requests like “Oh, by the way, can you also add a calendar event for soccer practice tomorrow for 5 p.m.?”, and it’ll connect to Calendar.

Reporting accidents and disruptions should also be easier. So, you’ll be able to say things like “I see an accident,” “Looks like there’s flooding ahead” and “Watch out for that slowdown”, and Gemini will mark it up accordingly.

Gemini in navigation will begin rolling out in the coming weeks on Android and iOS wherever Gemini is available, with Android Auto support coming later.

US-only additions

As for the aforementioned landmark-based navigation, it’s rolling out now on Android and iOS – but only in the US. The rest of us will have to keep being told when to turn in feet, like idiots.

The ability to receive proactive traffic alerts (alerting you to disruptions on your route even if you’re not navigating) is also rolling out now, but only to US Android users.

Finally, Google Maps is now using Gemini to answer questions about what a destination offers. So, you can ask it what the most highly rated dishes are at a restaurant, or if a bakery carries French butter croissants.

Lens has also been integrated, allowing you to hold your phone’s camera up to a location and then quiz Google’s assistant on what it has to offer. It’ll be available later this month to Android and iOS users, but – you guessed it – only in the US.

Google has been steadily rolling its Gemini AI assistant out to more of its services and devices of late, including its Nest speakers and cameras.
